95% of Generative AI Pilots Fail. Here’s How Mid‑Market CEOs Win

MIT’s GenAI Divide: State of AI in Business 2025 landed hard: researchers found that roughly 95% of corporate generative AI pilots are failing to deliver measurable P&L impact.

Drawing on more than 300 initiatives, 52 executive interviews, and 153 surveys, the team behind the study found a wide gap between the few AI initiatives that succeed and the many that do not. Despite abundant ideas, brilliant talent, and tens of billions of dollars in investment, nearly every project stalls in pilot mode.

The line between winners and losers comes down to whether AI is wired into the way work actually happens. The companies on the wrong side keep AI at the edges: think tools running in isolation and demos that never stick. The few on the right side are embedding it into workflows so that it learns, remembers, and improves. The returns show up when teams adapt process and technology together.

Why so many AI pilots stall

The first requirement when adopting AI is knowing what you’re trying to accomplish. Beyond a lack of direction, both what I see in the field and what the MIT coverage highlights show failure clustering around five patterns:

- Budget misallocation. Too much spend chases glam use cases in sales and marketing. Meanwhile, the clearest returns are showing up in back‑office automation, where cycle times, error rates, and unit costs are easy to measure.

- Flawed integration. Teams pilot a tool that sits outside daily work. Output looks noisy or brittle because the system never truly connects to data, rules, or process context. The study frames this as a learning gap rather than a model gap.

- Over‑building in house. Enterprises try to create custom models or applications from scratch and stall before production. Reports summarizing the research note that partnerships and purchased solutions succeed more often than DIY builds.

- Leadership misalignment. A central AI team owns the pilot, but functional leaders do not own outcomes or adoption. Projects never cross the last mile into everyday operations.

- Measurement gaps. No baseline, no clear exit criteria, and no adoption owner. The pilot drifts until funding or patience runs out.

Bottom line: AI fails when it lives beside rather than inside the business.

Where ROI is showing up right now

The fastest path to measurable value right now is through back‑office automation like contract processing, invoice routing, and administrative workflows with repeatable steps and known baselines. These use cases lend themselves to clear metrics: cycle time, error rate, cost per document, and vendor spend avoided.
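As a rough illustration, the back‑office metrics named above can be computed from a small sample of processed items before any pilot begins. This is a minimal sketch: the `ProcessedDocument` record, its field names, and the sample numbers are all assumptions made for the example, not figures from the study.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ProcessedDocument:
    """One processed item, e.g. an invoice or contract (hypothetical record shape)."""
    hours_to_complete: float  # elapsed time from intake to completion
    had_error: bool           # True if the item needed rework
    cost: float               # fully loaded cost to process the item


def baseline_metrics(docs: list[ProcessedDocument]) -> dict[str, float]:
    """Summarize cycle time, error rate, and cost per document for a sample."""
    if not docs:
        raise ValueError("need at least one document to compute a baseline")
    return {
        "avg_cycle_time_hours": mean(d.hours_to_complete for d in docs),
        "error_rate": sum(d.had_error for d in docs) / len(docs),
        "cost_per_document": sum(d.cost for d in docs) / len(docs),
    }


# Illustrative numbers only, not data from the MIT study.
sample = [
    ProcessedDocument(hours_to_complete=4.0, had_error=False, cost=12.0),
    ProcessedDocument(hours_to_complete=6.0, had_error=True, cost=18.0),
    ProcessedDocument(hours_to_complete=2.0, had_error=False, cost=9.0),
    ProcessedDocument(hours_to_complete=4.0, had_error=False, cost=13.0),
]
baseline = baseline_metrics(sample)
print(baseline)
```

Running the same function on items processed during the pilot gives a like-for-like comparison against the baseline.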

Marketing quick wins are real, with AI compressing hours to minutes on content drafting, research support, and chat‑based ideation. To measure ROI in time saved and throughput improved, track time per asset, editorial acceptance rates, and speed to launch.

Customer‑facing workflows will continue to improve as integration matures. Start where impact is provable, then expand.

Who should lead?

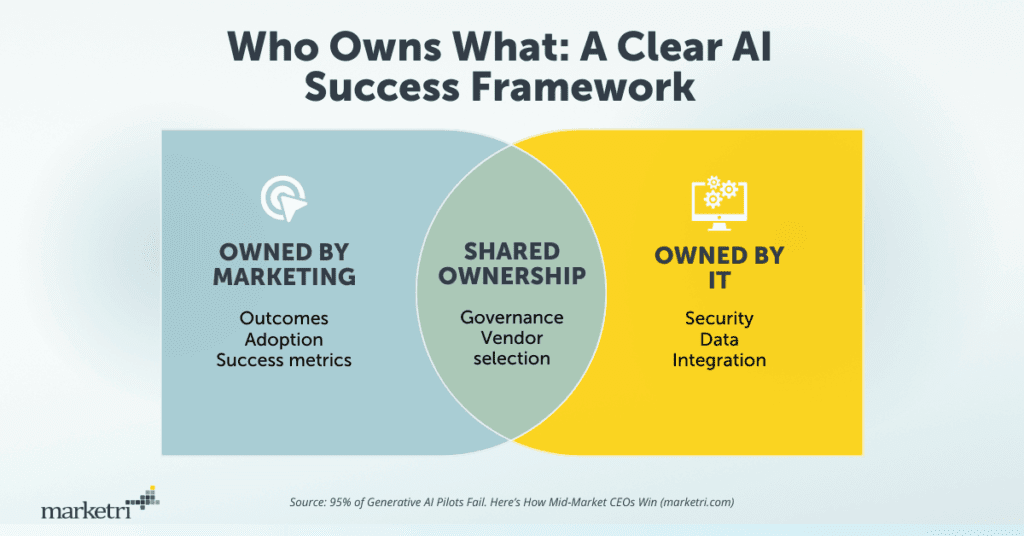

If the goal is revenue and customer experience, the roadmap belongs with marketing. Developers optimize for technical soundness while marketers optimize for growth and usability. You need both, organized clearly.

In my experience, the following structure keeps things efficient and scalable:

Here’s a quick example. A team wanted an “AI content assistant.” The first version wrote decent copy, but it did not match voice, format, or compliance rules. So Marketing redefined the required outputs and acceptance criteria while IT wired templates, style rules, and approval checkpoints into the workflow. Adoption followed because the tool finally worked the way the team worked.

The mid‑market playbook to avoid the 95%

Here is a simple four‑step flow you can ship in 90 days.

1) Pick one workflow and one metric

Select a single painful step inside a core process and document your current baseline with one metric, such as cycle time per contract, cost per invoice processed, or time to first draft. Crucially, name an individual who will own the outcome.

2) Select the vendor and the integration plan

Enterprise solutions and partnerships tend to outperform isolated internal builds, so it’s wise to go the vendor route. You should favor tools that connect cleanly to your stack, support data portability, and allow model choice. Write a lightweight plan for data governance and brand controls up front; that way, you get speed from the vendor and control from your rules.

3) Pilot 30 to 60 days with a line‑manager owner

Scope narrowly and run a weekly improvement cadence that’ll allow you to capture issues, decisions, and before‑and‑after metrics. Exit criteria should be explicit: adoption threshold achieved, target metric hit, and a documented SOP ready for training.
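One way to keep the three exit criteria above explicit is to write them down as a check that runs at each weekly review. The sketch below is only an illustration: the `PilotStatus` fields, the 60% adoption threshold, and the 25% improvement target are assumed values that you would replace with your own.

```python
from dataclasses import dataclass


@dataclass
class PilotStatus:
    """Weekly snapshot of a pilot (hypothetical fields for illustration)."""
    adoption_rate: float   # share of intended users active this week, 0.0 to 1.0
    baseline_value: float  # metric before the pilot; lower is better
    current_value: float   # same metric measured during the pilot
    sop_documented: bool   # True once the SOP is written and ready for training


def unmet_exit_criteria(
    status: PilotStatus,
    adoption_threshold: float = 0.6,  # assumed threshold, set your own
    target_reduction: float = 0.25,   # assumed target: 25% better than baseline
) -> list[str]:
    """Return the exit criteria not yet met; an empty list means ready to scale."""
    if status.baseline_value <= 0:
        raise ValueError("baseline_value must be positive")
    unmet = []
    if status.adoption_rate < adoption_threshold:
        unmet.append("adoption threshold not reached")
    reduction = (status.baseline_value - status.current_value) / status.baseline_value
    if reduction < target_reduction:
        unmet.append("target metric not hit")
    if not status.sop_documented:
        unmet.append("SOP not documented")
    return unmet


# Example: hours per contract fell from 10 to 7, but the SOP is not written yet.
week_6 = PilotStatus(adoption_rate=0.7, baseline_value=10.0,
                     current_value=7.0, sop_documented=False)
print(unmet_exit_criteria(week_6))
```

The check returns a list rather than a single pass or fail so the weekly review can see exactly which criterion is still open.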

4) Scale at 60 to 90 days with governance

Roll out training, permissions, and monitoring, and publish the SOP. Reduce lock‑in risk with a simple model‑abstraction layer, prompt portability, and clear export paths for your data. Track adoption in the dashboards that teams already use; it will make your life easier.
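For readers who want to see what a simple model‑abstraction layer with portable prompts might look like, here is a minimal sketch. Every name in it (`TextModel`, `EchoModel`, `PROMPTS`, `run_task`) is hypothetical, and `EchoModel` is a stand-in that calls no real vendor API; a real adapter would wrap your vendor's SDK behind the same method.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only interface the rest of the workflow depends on."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for a vendor adapter; a real one would call the vendor's SDK here."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        # Echo the prompt back so the example runs without network access.
        return f"[{self.name}] {prompt}"


# Prompts live as plain templates in your own code, outside any vendor's tooling.
PROMPTS = {
    "summarize_contract": "Summarize the key terms of this contract:\n{text}",
}


def run_task(model: TextModel, task: str, **fields: str) -> str:
    """Fill a stored prompt template and send it to whichever model is plugged in."""
    prompt = PROMPTS[task].format(**fields)
    return model.complete(prompt)


# Swapping vendors means swapping the adapter; prompts and workflow stay put.
print(run_task(EchoModel("vendor-a"), "summarize_contract", text="Net 30 terms."))
print(run_task(EchoModel("vendor-b"), "summarize_contract", text="Net 30 terms."))
```

The design choice is that workflow code only ever sees `TextModel`, so changing providers touches one adapter class rather than every prompt and integration.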

Addressing “Shadow AI”

Workers are not waiting for official AI programs to get started using AI in their daily work. Fortune coverage of the MIT report notes a “shadow AI economy” where employees at more than 90% of companies use personal chatbot accounts for work tasks, while only about 40% of companies have official LLM subscriptions.

That tells you two things: first, that people will find adaptable tools, and second, that many corporate platforms are failing to meet employees’ real needs. To avoid undesirable outcomes (and there are many), lean into a structured approach.

Here’s what I suggest.

- 30 days: inventory grassroots use, collect quick wins and risks, publish do’s and don’ts on data handling.

- 60 days: sanction two tools and pilot with your power users. Add data guardrails and brand controls.

- 90 days: roll out training and a simple request path for new use cases. Keep usage visible in your dashboards.

The in-house build question

I’m often asked if it makes sense to build in house. Though there are edge cases, my answer is usually no. Highly specialized, regulated, or IP‑critical capabilities can justify custom work, but even then, you should start with vendor models to validate the workflow and the ROI story before committing to a long development cycle. The study summaries point out that many internal projects never reach production, while partnered approaches get farther, faster.

CEO checklist you can ship in 90 days

- Choose one workflow with a measurable bottleneck.

- Assign a line manager as adoption owner.

- Set baseline and exit criteria before you start.

- Select a vendor that fits your stack and supports data portability.

- Pilot for 30 to 60 days with weekly metrics and decisions logged.

- Document the SOP and train the team.

- Scale with governance, permissions, and live dashboards.

- De‑risk vendor lock‑in with model flexibility and export paths.

What this means for mid‑market leaders

Large enterprises can afford to spin cycles on experiments, but you’ve got your own advantages. You’re closer to your processes, closer to your customers, and faster to align the right people. The MIT research underscores a simple truth: results come from integration and ownership, not from demos seen or dollars spent.

If you want a safe first win, start in the back office where baselines are clear and feedback loops are tight. If your growth goals point to marketing, I suggest starting with content acceleration and research support while you wire in the brand and compliance controls that make output usable. And as integration matures, you can graduate to customer‑facing workflows.

What’s next?

AI becomes a growth engine when you embed it in how work happens and hold it to the same standards as any core system. If you want a partner that thinks like an owner and integrates deeply with your team, Marketri’s fractional model gives you the leadership, orchestration, and vetted partnerships to move fast with confidence.

Start with Marketri’s free AI Readiness Assessment, a four-minute quiz that will show you exactly where your team stands with AI.